A Pilot Study of Translating Music into Expressive Finger Dance Videos

Abstract

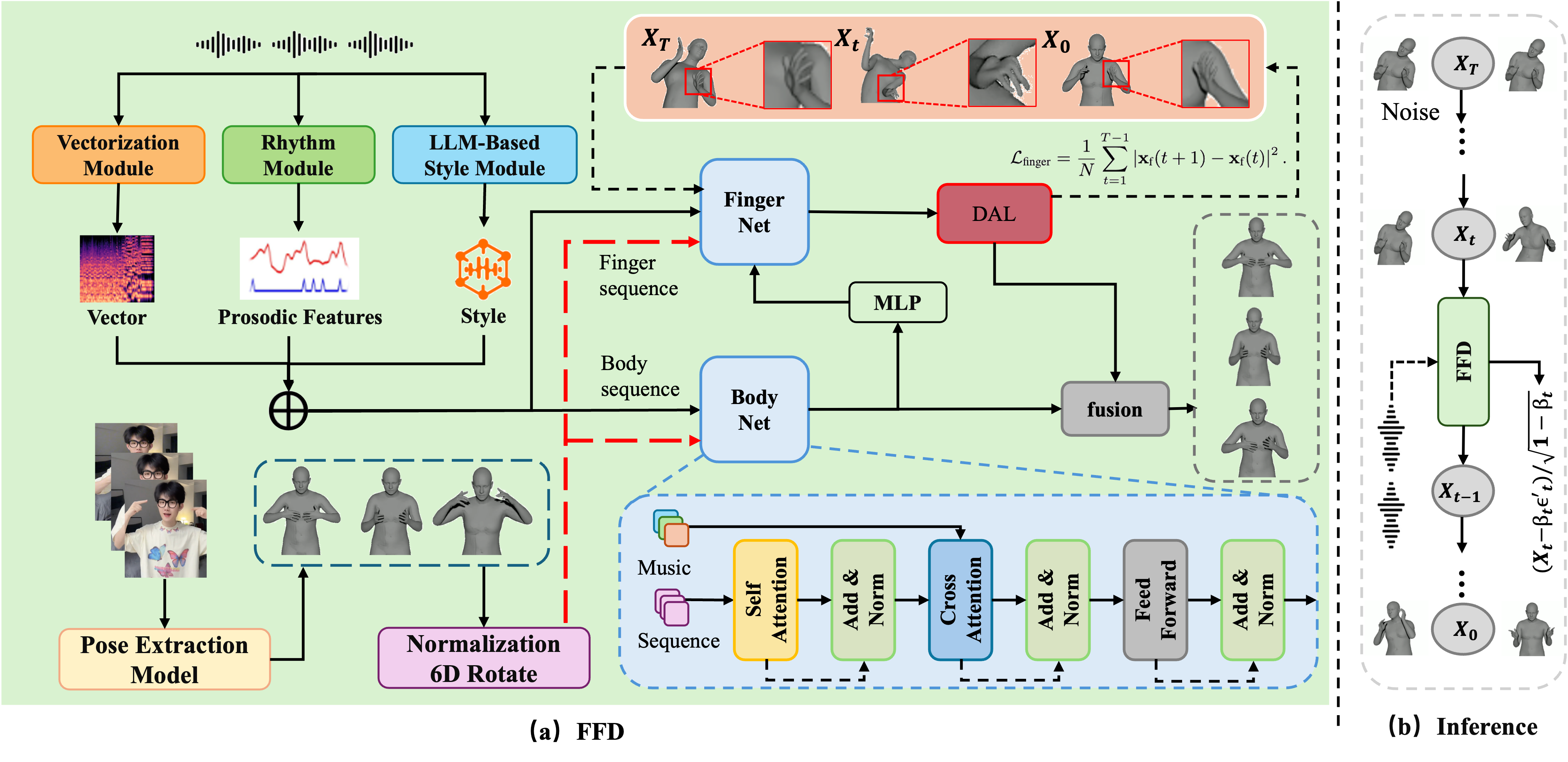

Finger dance, a form of expressive dance performed using only finger and arm gestures, has recently gained significant popularity on social media platforms. However, compared with general music-to-dance generation, synthesizing accurate, fine-grained finger dance poses a greater challenge, and this domain remains unexplored. Previous methods for music-driven motion generation often struggle to capture the subtle, fine-grained movements characteristic of finger dance, resulting in performances [...]. To tackle the challenge of generating expressive, detailed finger movements synchronized with music, we propose Fine-Finger Diffusion (FFD), a novel approach that maps music to fine-grained finger dance motions. FFD leverages a diffusion model to generate realistic and stable finger movements while capturing rhythmic variations. To enhance motion smoothness and coherence, we introduce a detail-aware loss (DAL) that penalizes excessive motion between consecutive frames. Importantly, we also present DanceFingers-4K, the first large-scale finger dance video dataset, which serves as a foundational benchmark for further research on this task. We further conduct extensive quantitative and qualitative experiments, including a user study. Our method outperforms existing approaches on multiple quantitative metrics. To the best of our knowledge, this is the first end-to-end deep learning framework for music-driven finger dance generation, with significant implications both for this specific task and for fine-grained motion generation research more broadly.
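The abstract describes DAL only as a penalty on excessive motion between consecutive frames; its exact formulation is not given here. The following is a minimal sketch under stated assumptions, not the authors' implementation: motion is taken to be a sequence of frames of 3D joint positions, and "excessive" is modeled as per-joint displacement beyond a hypothetical `margin`, penalized quadratically. The names `detail_aware_loss` and `margin` are illustrative only.

```python
import math
from typing import Sequence

# A pose is a list of joints; each joint is an (x, y, z) coordinate.
Pose = Sequence[Sequence[float]]


def detail_aware_loss(motion: Sequence[Pose], margin: float = 0.0) -> float:
    """Hypothetical frame-to-frame motion penalty (illustrative sketch only).

    motion: T frames, each holding J joints with 3D coordinates.
    margin: assumed per-joint, per-frame displacement tolerated before any
            penalty applies; margin=0.0 penalizes all motion quadratically.
    Returns the mean squared excess displacement over every joint and every
    pair of consecutive frames.
    """
    if len(motion) < 2:
        return 0.0  # no consecutive frame pairs to compare
    total, count = 0.0, 0
    for prev, curr in zip(motion[:-1], motion[1:]):
        for p, c in zip(prev, curr):
            # Euclidean displacement of one joint between consecutive frames.
            disp = math.dist(p, c)
            # Only the part of the displacement above the margin is penalized.
            excess = max(0.0, disp - margin)
            total += excess ** 2
            count += 1
    return total / count


# Usage: one joint moving 1 unit per frame over 3 frames.
moving = [[(float(t), 0.0, 0.0)] for t in range(3)]
print(detail_aware_loss(moving))              # 1.0
print(detail_aware_loss(moving, margin=0.5))  # 0.25
```

With `margin=0.0` the sketch reduces to a plain mean squared velocity penalty; a positive margin leaves small, deliberate finger motions unpenalized while still discouraging sudden jumps. In an actual training setup such a term would presumably be written with a differentiable tensor library and added, with some weight, to the diffusion objective; that weighting is likewise an assumption.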